Therefore, iterative, experience- and practice-led measurement reflects a different type of culture and mindset around how we continually develop and improve services. Our work with organisations wishing to move to a more preventative and early intervention approach has highlighted a need to shift from a culture where frontline staff are gatekeepers of a service at the time of crisis, following process and activity/time targets, to one where frontline staff are problem-solvers, doing what they judge is best to achieve the right outcome for the person. In the latter world, frontline staff have control over their service: they use impact data and their own trusted feedback to see how it is working, and they are empowered to change and improve it. In a constantly evolving world, a service redesign is never complete, and impact measurement is part of delivering a constantly improving service.

But it is not easy to roll back decades of public sector management and create the mindset the RSA calls ‘think like a system, act like an entrepreneur’.2 Leaders need to promote a reflective, learning culture in which frontline staff actively seek feedback from users, are trusted to give feedback themselves, and make improvements to the service they deliver. Service design, through its involvement of staff in the design process and the artefacts it creates (e.g. a problem-solving conversation guide rather than tick-box forms), is a powerful vehicle for this culture change.

And what of users? They are also included in the audience in Table 1. As well as developing and improving services, iterative impact measurement can be part of their delivery. A preventative approach also requires greater self-awareness and resilience-building among users. Impact measurement should not be seen as a one-way stream, with organisations sucking up data and making decisions. Rather, gathering feedback and data and relaying it back to users (on its own, as a self-quantified visualisation, or with additional tailored advice) can be part of the service offer itself. The act of recording data about your health, for example, can prompt you to adopt healthier behaviours. Research and implementation are intertwined in apps like ‘mappify’, which pulse-checks people’s health, or ‘Colour in City’, which used digital technology to collect people’s experiences of their city and prompt behaviour change.

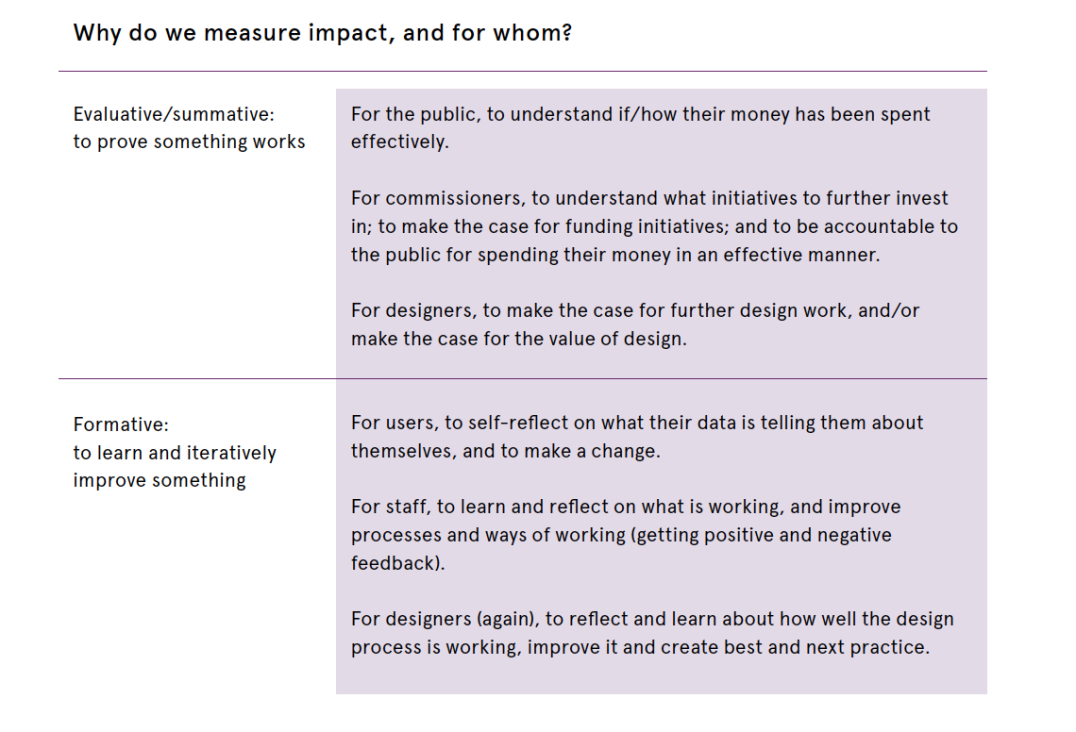

The idea that a service is static (designed, evaluated and then left unchanged) looks increasingly out of date. Services, in both their digital and face-to-face components, need to change and evolve with the systems that surround them. As well as designing the service, designers need to upskill frontline staff to lead this iterative change. Perhaps more important than summative evaluation (proving that something works) is formative evaluation (learning what works and improving what doesn’t). In traditional frameworks, evaluation comes at the end of the process. Instead, we need to see impact measurement as part of the delivery of the service itself, with frontline staff and users looking at a variety of iterative measures, reflecting on how well things are working, and changing their behaviour and the service if they are not.
