Automated transcript

Introduction

---

Vanessa: Today we will speak about research repositories, with tips on how to set up and maintain a research repository for yourself, your team and your company. Within the next approximately 30 minutes, I'd like to introduce myself and the topic, explain what a research repository even is and what benefits it can provide, as well as give you tips if you're interested in establishing a research repository.

Lastly, I will summarize the key takeaways for you before we dive into a hopefully lively Q&A discussion, which allows us to have some interesting conversations.

Who is Vanessa?

---

Vanessa: All right, before we dive into the topic, I would like to give a brief introduction about who I am. I have been working as an experience consultant at evux in Zurich since 2022.

I started my career a few years ago at UBS, and I also worked in the marketing departments of a real estate agency and a rehabilitation clinic before I became an experience consultant. I had the chance to study service design at the University of Applied Sciences in Chur, and during my studies I became incredibly passionate about service design. It's great that I get to do that now in my job at evux.

My own work also consists of planning and executing user research, developing concepts up to low- and mid-fi prototypes, as well as process and requirements engineering. It's very diverse and I'm really glad that service design is still a big part of that. Of course, I'm not alone. In total, we're seven experience enthusiasts trying to make the world a bit better every day.

At evux, the core of our work is the human-centered design process. We work together with the people whose experience we want to enhance with a particular product or service, be that employees, customers or staff. If we look at the process, first we need to understand who those humans even are and what they need in the specific context that we're looking at.

Second, we have to define which needs we want to cover before developing a solution that meets those needs. And most importantly, we need to evaluate whether our solution fulfills those needs, and if that's not the case, we iterate on the solution until those needs are met. Now, as you can imagine, this requires us to talk to those people, to conduct research, to gather data in a structured manner.

Generative vs. Evaluative Research

---

Vanessa: And when we talk about research, user research, customer research, it's really important that we differentiate between generative and evaluative research. You can map that onto the human-centered design process. You can also map it onto the double diamond, which we usually use in service design. The important thing is that generative research helps you identify opportunities and ideas, while evaluative research helps you figure out if your existing solution is on the right track. Oh my goodness, sorry for that one. If we look at the double diamond as well as the human-centered design process, it becomes evident in both cases that generative research happens earlier and has other objectives. When you begin with generative research, you really start from scratch.

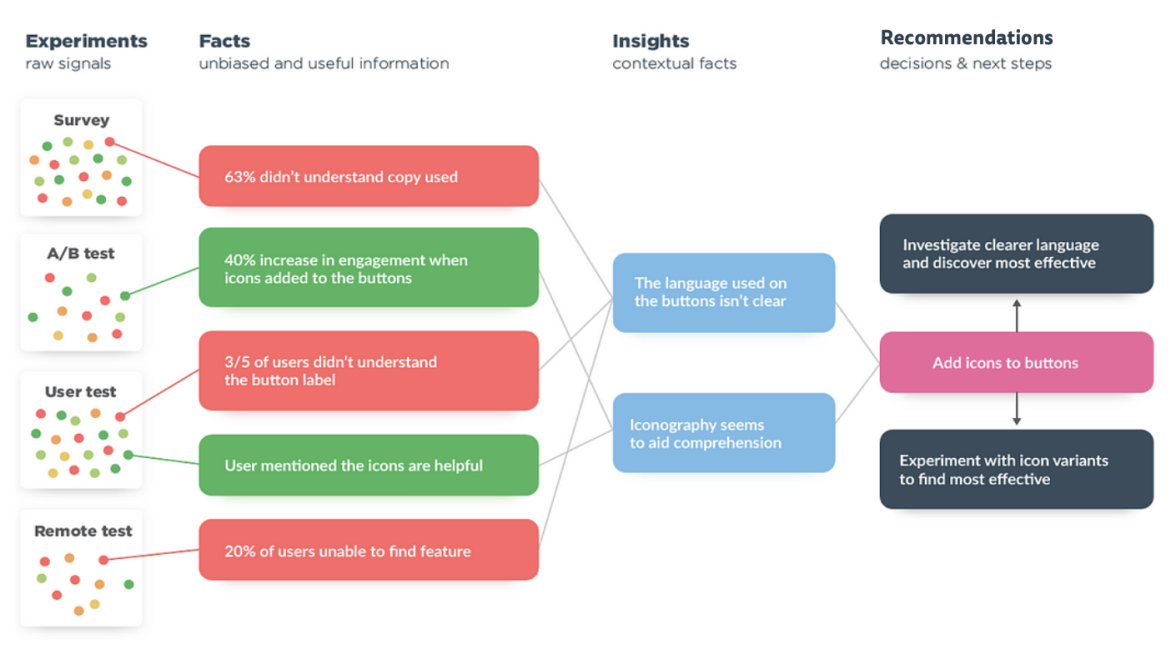

You have to understand it first. The process is mostly not as straightforward as it is when you go into evaluative research. There you usually have a concept, a prototype, some scenario, something existing, some hypothesis that you want to test. That's really the difference. Now, when evaluative research is being conducted, you basically break it down into smaller parts, its constituent parts, and those are called atoms. You can use those atoms, that's why it's called Atomic User Research, to then create facts and gain insights, which then lead to a recommendation. That's basically the idea of this concept. Classic examples of evaluative research are surveys, A/B tests, user tests and so on. Now, why am I even telling you this?

It's important to know that many of the research repository tools which are available out there use some way or some variant of this concept as their underlying structure. Some of the solutions do that quite rigidly, to be honest. And others allow more flexibility so that you can even conduct generative research there.

That's really important. You have to know what am I using my research repository for? Am I only doing evaluative research? Am I only doing generative research? Am I doing both? How much flexibility do I need?
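
As a rough illustration of the atomic structure described above (facts lead to insights, which lead to a recommendation), here is a minimal Python sketch. The class names, fields and the example data are assumptions made for illustration; they are not taken from any of the tools mentioned in the talk.

```python
from dataclasses import dataclass, field


@dataclass
class Fact:
    """A single observation from one experiment (an 'atom')."""
    experiment: str
    statement: str


@dataclass
class Insight:
    """An interpretation that is backed by one or more facts."""
    summary: str
    facts: list[Fact] = field(default_factory=list)


@dataclass
class Recommendation:
    """A proposed action that is backed by one or more insights."""
    action: str
    insights: list[Insight] = field(default_factory=list)

    def evidence(self) -> list[str]:
        # Trace the recommendation back to the facts it rests on.
        return [f.statement for i in self.insights for f in i.facts]


# Hypothetical example data, not from a real study.
fact = Fact("usability test 1", "3 of 5 participants missed the pay button")
insight = Insight("The pay button is hard to find", [fact])
rec = Recommendation("Move the pay button to the top of the screen", [insight])
print(rec.evidence())
```

A structure this rigid suits evaluative research well; for generative research you would probably want looser links, which is the flexibility question raised above.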

Challenges in Research Repositories

---

Vanessa: One great example: I had the chance to test a solution which is out there, called Gleanly, with a customer of ours. They called me to do an expert review of their existing mobile app.

And I thought, great, let's use the opportunity. Let's try it with Gleanly. Let's put those facts in there. Let's generate those insights in there. They're used to it. They can make it. And I quickly realized that even an expert review, which tests an existing mobile app in this case, is quite hard to build or visualize in that research repository.

Because usually when you do an expert review, you use certain heuristics which are out there, and then you test the mobile app and see, okay, this heuristic is not met in certain cases. And then you say, all right, this is the heuristic which is in breach, this is our insight, and this is the recommendation.

And I thought, great, let's take the insight, let's take the heuristic and let's take the recommendation. But we had to switch that up, because otherwise we were not able to build it the way we wanted. So it really depends on what experiment you do, and you need to know at the beginning whether it's possible to create facts, insights and recommendations from it.

Now, if you would like to read more about the concept of atomic user research, we do have an article about it on our website, so feel free to screenshot this slide and read some more about the topic after the webinar. The article is in German, but you can easily translate it with DeepL, Google Translate, whatever you use.

So if you're more interested in that, feel free to read the article. All right, now let's dive into the research repository part. Let's say this is Mark. Mark is a team of one, and as long as he's the only one conducting research, he's basically his own source of truth and he knows all the research that he has done himself.

Stakeholders know that if they want to know something, they ask him. He has a certain process implemented that works for him, and he works along that process. There's not necessarily a need to assure quality with a lot of measures, because he's responsible for his own quality.

Now, let's say it's not only Mark, but it's a whole team of researchers. Then it's not as simple anymore, because what happens is you suddenly have many studies being conducted at the same time. And you have maybe different research styles, and you have to have a quality assurance process all of a sudden.

You have to know how to make sure that the stakeholders are able to use your insights. Stakeholders don't know who to ask. Maybe you don't even know what your colleague is doing, and it becomes a bit more complicated. So what is a research repository then? Why does a research repository make sense?

How does a research repository help? The great thing is that the research repository usually takes all of your, let's say, PDFs, PowerPoint presentations, user interview protocols and unmoderated videos that you might have, which are just flying around on a drive somewhere. Maybe you have certain folders, but you have to look in folder one and then in folder two to get the overview.

And a research repository basically takes that and offers you a central and reliable source of knowledge. But not only that: it's like a library, but it's even more searchable because it's digital. You can easily search globally across your whole research repository. So let's say, okay, we want to know everything we did in banking.

So you could just type in banking and you get every study you've done on the topic of banking in the last few years. It also becomes more readable, and you have the possibility to give it a certain structure, to have an index, to sort everything you want to sort, and of course to then use it another time.

You could do a meta research study, for example, where you say, okay, in study one, we saw this, and in study two, we saw this. So what does that mean for another study, for example? So a lot of secondary research becomes possible, but also just having everything in one place and having everything available is really the magic that happens when you implement the research repository.
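
To make the "type in banking and get everything" idea concrete, here is a minimal sketch of a global search over study records. The `Study` fields and the sample studies are hypothetical; real repository tools have their own data models and search features.

```python
from dataclasses import dataclass


@dataclass
class Study:
    title: str
    year: int
    tags: set[str]
    summary: str


def search(studies: list[Study], term: str) -> list[Study]:
    """Return studies whose title, summary or tags mention the term."""
    needle = term.lower()
    return [
        s for s in studies
        if needle in s.title.lower()
        or needle in s.summary.lower()
        or any(needle in t.lower() for t in s.tags)
    ]


# Hypothetical repository content.
studies = [
    Study("Online banking app walkthrough", 2022, {"banking", "mobile"},
          "Usability walkthrough of the transfer flow."),
    Study("Clinic website interviews", 2023, {"health"},
          "Interviews about appointment booking."),
    Study("Bill payment scenario test", 2023, {"Banking"},
          "Scenario test for paying a bill."),
]

hits = search(studies, "banking")
print([s.title for s in hits])
```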

Benefits of Research Repositories

---

Vanessa: The benefits. First and foremost, you have one single source of truth. You have everything in one place. It's all centralized and everyone knows where they have to look for certain information. Now, the other benefits can be benefits if you want them to be. So one thing that you might want when you implement a research repository is a central point to find your data.

So it's about searchability. If you want to make it accessible to stakeholders, you can. It gives transparent and also consistent results, because everyone who does research implements basically the same research process, and the insights are always generated in more or less the same way.

And it enables the management of research activities and, in the end, also scalability across the company. Why that? It prevents redundancies. If you don't know that your colleague just conducted that research and you go out and conduct exactly the same research, that costs money. If you just knew they had already done it, you could access those insights and work with them.

It's better for everyone. And the other thing, as I said, is secondary research or meta studies. If you've done 10 studies in banking about, let's say, online banking apps and, I don't know, paying a bill, for example, you can go there, look across all those projects and generate new insights from the insights you've gained in each of those projects.
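
A meta study of this kind can be sketched as grouping insights from several projects by topic and looking for topics that show up in more than one study. The tuple layout, the topic names and the threshold of two studies are assumptions for illustration only.

```python
from collections import defaultdict

# Hypothetical insights as (study, topic, insight text).
insights = [
    ("study 1", "bill payment", "Users expect to scan the payment slip"),
    ("study 2", "bill payment", "Users double-check the amount before paying"),
    ("study 2", "login", "Biometric login is preferred over passwords"),
]


def insights_by_topic(rows):
    """Group insights from all studies under their topic."""
    grouped = defaultdict(list)
    for study, topic, text in rows:
        grouped[topic].append((study, text))
    return grouped


def recurring_topics(rows, min_studies=2):
    """Topics that are backed by insights from at least min_studies studies."""
    grouped = insights_by_topic(rows)
    return sorted(
        topic for topic, items in grouped.items()
        if len({study for study, _ in items}) >= min_studies
    )


print(recurring_topics(insights))
```

The same grouping also helps with redundancy: before planning a new study, you can check whether a topic already has insights attached.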

Tools and Solutions for Research Repositories

---

Vanessa: Now, there are a lot of providers out there. There are a lot of tools out there. And for me, it's important to give you a little disclaimer: I'm not here to say one tool is better than the other, and this is not an ad for a certain tool. It's just really my opinion, my experience, and a neutral view on those tools.

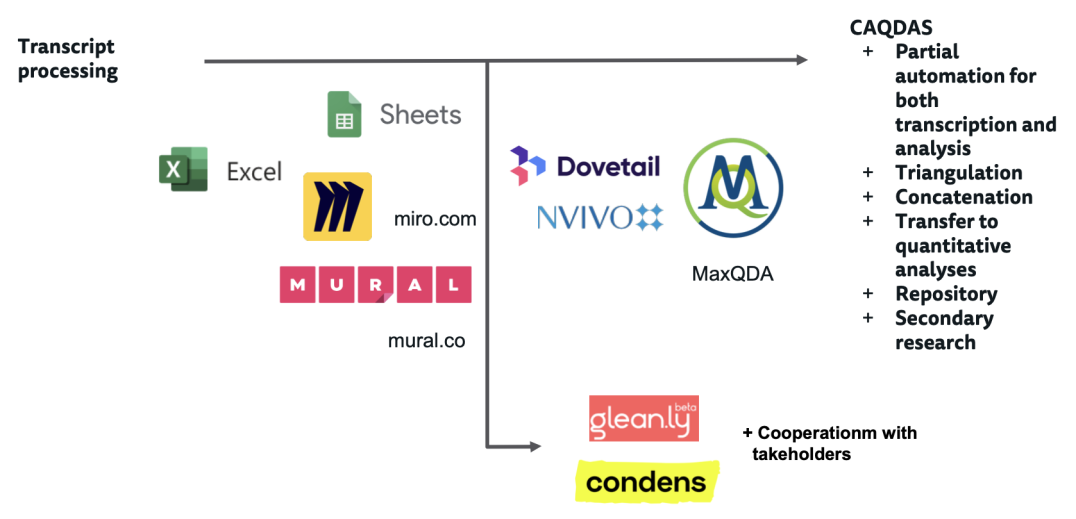

If we look at this slide right here, you can see that at the far left you have tools as simple as an Excel sheet, where you write down everything you've seen in, let's say, a user test. You have your protocol right there, and you maybe take all of the insights or all of the data that you've gathered there, put it on a Miro or Mural board and start to cluster it there.

Works perfectly fine. The problem on this far left is that once you've used the data and once you've presented your insights, the data is basically dead. It just remains in that one folder and nothing happens anymore. It's not as accessible. If we move more towards the right, we have systems with the beautiful name of CAQDAS, that's Computer-Assisted Qualitative Data Analysis Software, I think.

And you see there, you get a few more features: you can also have a repository right there, you can do some sort of triangulation. But what's still missing there is the cooperation with stakeholders and the collaboration with other colleagues, for example. And down here, we have a few examples of tools which allow for that collaboration.

One is Gleanly, which I talked about before, and the other one is Condens.

I really love this map. It's not the most readable one, to be honest, but userinterviews.com issues a UX Research Tools Map every year. It doesn't look like this every year, but that's, I think, the 2023 one, so it's the newest one. Basically, what they do is cluster research into parts, processes and steps, and add the tools which are available out there.

So if you're looking for a tool and you don't know where to start, or if you just don't feel like googling and maybe getting just the one that pays the most for ads, you can go to userinterviews.com and download that whole list. Let's have a look at the Grand Duchy, or Duchy, I don't even know, of Insight Management.

That's where we also have our Condens. Once again, we see MAXQDA and Dovetail, which we've seen before. We have Aurelius here, Gleanly here. So they're all in the same area. Now, why is insight management so important? Why do you want to share your knowledge with stakeholders?

First and foremost, they want to know what you're doing, and they want to find out and see for themselves what you've discovered. Oftentimes, we hear a discussion about democratizing user research, and many of the people who want to democratize it want to democratize the methods and make methods available to stakeholders.

Now, the problem is that a fool with a tool is still a fool: stakeholders might have a good tool, but they still don't conduct research the way we would. Instead of democratizing the methods, it's way more interesting and way better to democratize the insights, which means you want to make those insights more available to your stakeholders.

And these are some of the tools, especially right here, which allow us to do that. I had the chance to experiment with and test out Aurelius, which is one of them, as well as Condens and Gleanly, as I said. And we also have an article on our blog where we state all the pros and cons of each of those tools. As I said before, I'm not saying one tool is better than the other, and none of them are sponsored.

They're just the ones that we tested and where we gathered our experiences, and we tried to make that more accessible. So you don't have to go through all of that yourself, but can instead read and know for yourself: okay, what can this tool do? What did evux think was good or not as good?

So feel free to screenshot this as well and read it at a later moment.

Tips for Setting Up Your Own Research Repository

---

Vanessa: Now, tips for your own research repository. I structured it into different steps, and the first and foremost is to know your process. I said it at the beginning already. It's about knowing whether you're more into generative research or more into evaluative research, but also just how you are conducting research today.

Usually we do it quite implicitly, to be honest, and when you have to sit down and write, okay, I'm doing step one, two, and three, it's not as easy. And sometimes it's like, why the heck am I doing it my way? Or, oh, interesting, I'm doing that differently than maybe my colleague does.

So it's really important to know your process before you start. Then, based on that, you can define your requirements. What do you need to implement or keep that process? What are the requirements that you have? You can then search for potential tools which are available out there, and then what I really recommend is: test.

We thought Gleanly was great until we got to try it with our client and we had to say, okay, no, it's just not working for us at all. But we didn't know until that point, when we really tested it and had a real case where we were able to implement it and go through the whole process. Sometimes it can happen that you have to go back and define new requirements, for example.

That's only if you use a tool which is out there already. Of course, if you decide to develop your own tool, because you may be in software and you're able to do it yourself, you have to have your requirements first before starting to develop. But usually it's quite iterative, so you can go back and forth.

To give you an example of some requirements that we had before we started: one was really the flexibility, because we said, okay, we're doing generative as well as evaluative research, so for us it's important that we have some sort of flexibility. Another requirement for us was that we can use the research repository and the tool for all the experiments we do.

So let's say interviews, scenario tests, usability walkthroughs, and so on and so forth. You name it, whatever we do, we wanted to be able to insert that into the research repository, because otherwise you only have one part centralized and the other one not, so it's just not reaching the goal. Another requirement we had, for example, was that we wanted to be able to get all the experiments out of the tool if we decide that it's not for us and we're no longer using it.

So an export function was really important for us. Another thing was the location of the servers. Are they compliant with the DSGVO (GDPR), so let's say data privacy, and where are the servers located? We said, okay, we want to take that into consideration if we can. Those were some of the requirements we had.

Quite a list, to be honest, and then we started to look for tools, and it was really like that: I went in with my requirements, I searched for tools, and I checked whether they met those requirements or needs. If not, we didn't even test them, because we were like, okay, it's not worth testing. That's why we ended up with three tools, in our case Condens, Gleanly and Aurelius.
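
The screening step described above (only test tools that meet the requirements) can be sketched as a simple checklist filter. The requirement keys mirror the ones mentioned in the talk, but the tool names and their capabilities below are placeholders, not statements about any real product.

```python
# Requirements named in the talk, written as hypothetical capability keys.
REQUIREMENTS = {
    "flexible_structure",    # generative as well as evaluative research
    "all_experiment_types",  # interviews, scenario tests, walkthroughs, ...
    "export",                # get complete projects out again
    "eu_servers",            # server location and data privacy
}

# Placeholder tools with made-up capabilities.
candidates = {
    "Tool A": {"flexible_structure", "all_experiment_types", "export", "eu_servers"},
    "Tool B": {"flexible_structure", "export"},
    "Tool C": {"all_experiment_types", "export", "eu_servers"},
}


def shortlist(tools, requirements):
    """Tools that meet every requirement and are worth a hands-on test."""
    return sorted(name for name, caps in tools.items() if requirements <= caps)


def gaps(tools, requirements):
    """For each tool, the requirements it does not meet."""
    return {name: sorted(requirements - caps) for name, caps in tools.items()}


print(shortlist(candidates, REQUIREMENTS))
print(gaps(candidates, REQUIREMENTS)["Tool B"])
```

Keeping the gaps per tool also documents why a tool was dropped, which helps later when you no longer remember the reason.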

Now, once you've done the analysis, it's about the setup. What we did, and what I can really recommend, is to launch a pilot phase. For example, we started with usability walkthroughs and said we want to do five walkthroughs within the tool that we've chosen, to make sure that it's really what we need.

We didn't want to insert all of our experiment types in there and then say after half a year, okay, it's just not worth it. So we said, let's start with one, let's launch a pilot phase, let's do the next five usability tests with that tool, and then we'll evaluate that. So I can really recommend launching a pilot phase.

Then, of course, you have to have some sort of goals or KPIs. For us, it was really important to standardize everything as well, to become, you know, better, faster, more accurate, and to make fewer mistakes, for example. So you have to know what you want to reach or what you want to change when you decide on one of those tools.

Another really important thing is taxonomy and global tagging sets. Most of those tools allow you, as we do with every research project, to code or tag your insights in order to be able to cluster them afterwards. Interestingly enough, we found out that in the science world, they use the term coding when they assign a code to a piece of information, while in UX or in those tools, they usually use the term tagging.

In the end, it's exactly the same. What we realized with the global tagging sets, for example, is that in the beginning we had two different sets of heuristics, which were both within that tool. We used the ISO dialogue principles, and we also used the Nielsen Norman heuristics. Then we realized, okay, no, hold on: someone is using the Nielsen Norman heuristics and someone else is using the dialogue principles, and both sets have a heuristic about, for example, how the system supports the user in his or her task.

If we want to search for, let's say, everything we found out about that user support, and we don't know that one person used this global tagging set and another person used the other one, we're running into a problem. So we had to decide on one set and say, okay, from now on, we're just using, for example, the dialogue principles.

So it was really important to know which tags you usually use. The same goes for usability walkthroughs, just because we chose those first. When data comes in and we see, okay, this was really a usability issue which we have to prioritize, we had certain numbers which we gave, so one was not as bad and three was really bad.
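
The tagging problem above can be sketched as mapping heuristic names from both sets onto one global tag, combined with the 1-to-3 severity scale. The heuristic names are real entries from Nielsen's heuristics and the ISO 9241-110 dialogue principles, but pairing them up like this, the global tag names, the label for severity 2 and the findings are all assumptions for illustration.

```python
from enum import IntEnum


class Severity(IntEnum):
    """Scale from the talk: 1 is not as bad, 3 is really bad."""
    LOW = 1
    MEDIUM = 2  # the label for 2 is an assumption
    HIGH = 3


# Assumed pairing of heuristics from two sets onto one global tag.
GLOBAL_TAG = {
    "Error prevention": "error-handling",       # Nielsen
    "Error tolerance": "error-handling",        # ISO dialogue principles
    "User control and freedom": "user-control",  # Nielsen
    "Controllability": "user-control",          # ISO dialogue principles
}


def normalize(tag: str) -> str:
    """Map a heuristic name to its global tag; unknown tags stay as they are."""
    return GLOBAL_TAG.get(tag, tag)


# Hypothetical findings as (heuristic, severity, note).
findings = [
    ("Error prevention", Severity.HIGH, "No confirmation before deleting a payee"),
    ("Error tolerance", Severity.LOW, "A typo in the IBAN is only flagged after submit"),
    ("Controllability", Severity.MEDIUM, "A transfer cannot be cancelled midway"),
]


def by_global_tag(rows, tag):
    """All findings under one global tag, most severe first."""
    hits = [r for r in rows if normalize(r[0]) == tag]
    return sorted(hits, key=lambda r: r[1], reverse=True)


errors = by_global_tag(findings, "error-handling")
print([note for _, _, note in errors])
```

Deciding on a single set, as described above, makes the mapping unnecessary; a mapping like this is mainly useful for cleaning up projects that were tagged before that decision.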

We had to define those as well and insert them into the tool. Another thing is to create templates, which depends on the tool. Our tool, for example, was able to create templates, so whenever you start a new project or a new experiment, so whenever you go in and say, okay, I'm doing a new usability walkthrough, the structure was basically the same.

You had a first page, and the content within the first page was part of the template. So I inserted a checklist there saying, hey, when you do a usability walkthrough, you have to think about A, B, C, and D, and you can basically just go through a to-do list. You know what's going on, and it's all built the same. So that's one part of the standardization.

And what we did, and what I highly recommend as well, is to define a product owner and a process owner. So don't let your whole team change, for example, the global tags, just because they feel like, I need another global tag. It's really important that only one person is responsible for changing those templates and tags, and that it still complies with your process, because if you're changing the process, you have to think about it once again: okay, do we really need that?

Does that make sense? Why are we changing it? In our case, those are two different people. I'm the product owner, basically, and we have someone else as our process owner, which assures some sort of quality as well. So we know, okay, this makes sense from a product view as well as a process view. Now, usage and maintenance.

You have to be aware that when you implement a research repository, all of your colleagues, your whole team, have to work differently, have to work with a different tool, have to do some things differently than they did before. And that's really difficult, especially because with research repositories and those tools, your work becomes evident.

You're forced to work evidence-based, because if you don't have your fact, you cannot create a recommendation: where would that recommendation come from? And in the end, not every researcher likes that. So there is really a component of change management. You have to be aware that not everyone is greeting that tool with open arms saying, awesome, I've waited for this for my entire life.

So there's a lot of change management going on. What helps there is to set up and document guidelines. We set up a Notion page, for example, where we said, okay, why are we using this now? How are we using it? And how long is the pilot phase going to be? What's going to happen afterwards? Just to let everyone know.

This is what we're doing, this is why we're doing it, and this is how we're doing it. The next point to help a bit with the change management is to ensure a good onboarding.

Team Introduction and Tool Demonstration

---

Vanessa: We had, I think, half a day where the whole team came together. I introduced the tool, I showed them how it works and what the idea behind it is, and I even recorded a small video of myself being a user. They basically got the chance to protocol, or write down, what I'd done in that video, just to get into it without having to do it with a customer straight away.

Collecting and Managing Feedback

---

Vanessa: The fourth step would be to collect feedback. Maybe there are some edge cases or some difficulties within the team which you were not aware of as a process or product owner. So it's really important that you have some specific feedback base where you can collect the feedback and also take care of it.

So also, let's say, if they desire some kind of change or whatever, that you collect that in a systematic manner.

Monitoring and Grooming the Tool

---

Vanessa: And the last thing when it comes to usage and maintenance is really monitoring and grooming. Sometimes people forget to close a project, for example, or there are tags which are not used, so you have to think about whether you need those tags or not. And you just have to make sure that everyone uses the tool, that they get along with it, and that they put in the right information.

So there's a lot of monitoring and grooming as well. It's not very time-intensive or anything, but you have to do it from time to time. And it's good if the product owner does that too, because then there's only one person responsible for it, and you have it a bit more structured.
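
Part of this grooming can be sketched as a periodic tag report: which global tags are never used, and which tags are in use although they are not part of the global set. The function name and the sample data are hypothetical; a real tool may offer such a report out of the box or via its export.

```python
from collections import Counter


def tag_report(global_tags: set[str], project_tags: list[list[str]]):
    """Return (unused global tags, tags used but not in the global set)."""
    counts = Counter(tag for tags in project_tags for tag in tags)
    used = set(counts)
    unused = sorted(global_tags - used)
    unknown = sorted(used - global_tags)
    return unused, unknown


# Hypothetical global tag set and the tags used in three projects.
global_tags = {"error-handling", "user-control", "navigation"}
project_tags = [
    ["error-handling", "user-control"],
    ["error-handling", "my-own-tag"],
    ["user-control"],
]

unused, unknown = tag_report(global_tags, project_tags)
print("unused:", unused)
print("unknown:", unknown)
```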

Key Takeaways and Scalability

---

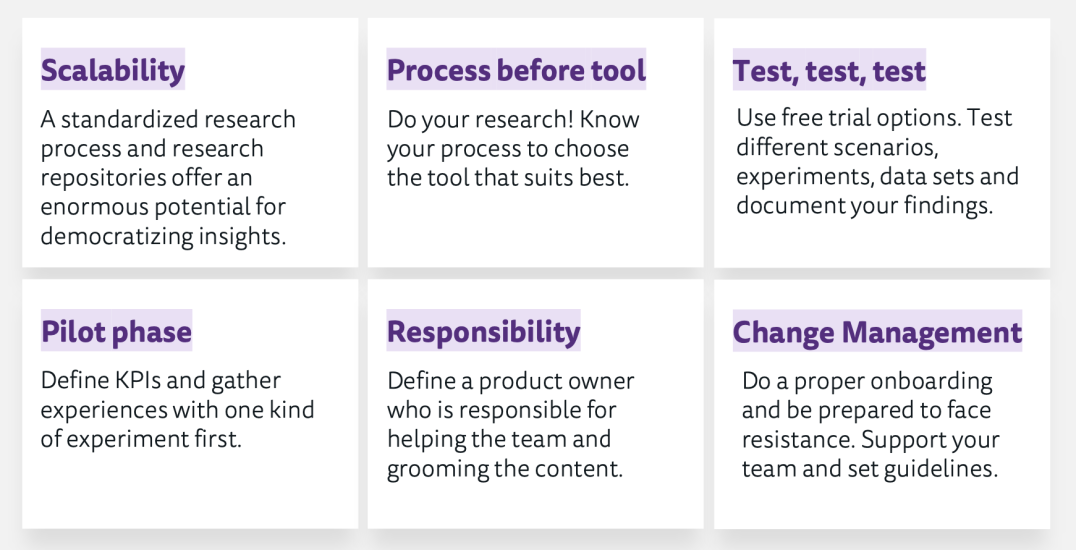

Vanessa: All right, looking good, I'd say I've got probably a few more minutes, so let's go into the key takeaways. Those are really the key takeaways which I want to give to you or which would be great if you take from this webinar, basically. And one is really the scalability. So if you standardize your research and if you implement the research repository, it offers that potential for democratizing those insights.

And that really allows then for the scalability. So you can make research way more accessible within your company. Another really important thing is.

Process Before Tool and Testing

---

Vanessa: Process before tool. If you implement a tool that doesn't work with your process, you're not going to use it. It's bound to fail, to be honest.

So do your research first and know your process, to then be able to choose the product or the tool that suits you best. Another thing is really testing: test, and test once more. Many of the providers out there offer free trial options. For example, I've tested different scenarios, different experiments.

I had a few data sets from other clients which I got to use and which I just put into the tool, and I tried and tested and thought about, okay, what are we doing? How are we doing it? Does that work or doesn't it work? And what's really important is to document it, because that takes a while and sometimes you forget why you made the decision that you made.

We had the moment where we said, okay, what was the problem there again? And it really helps if you document that and say, this works great in this tool, this doesn't work as great in this tool.

Pilot Phase and Defining KPIs

---

Vanessa: The fourth point would be the pilot phase: really just define your KPIs and gather experience with one kind of experiment first.

Be that interviews, be it usability walkthroughs. Don't try to do everything at the same time. It's not about a big bang. It's about getting to know the tool and making sure it works for you in the long term before committing completely. Then there's the one thing with the responsibility: define a product owner who's responsible for helping the team and grooming the content, and who is there to take care and be the ambassador of your research repository.

And the last but not least point on this list is the change management.

Change Management and Onboarding

---

Vanessa: As I said, do a proper onboarding and be prepared to face resistance. Not everyone is going to like it. Try to support your team: set up guidelines, set up some FAQ, whatever helps them to use the tool, to get to know it, and to make the change or the transition a bit easier.

All right, I'd say we'll go into the Q&A. But if I'm allowed, I would like to show this slide first. If you want to keep in touch, feel free to connect with me, and feel free to follow evux on LinkedIn. We share a lot there, also about what's going on with research repositories. And if you want to accelerate your research, reach out to us.

I've gathered quite a bit of experience, and I'm happy to share it or to help teams when they don't know where to start or have to define their process first before they are able to choose the right tool. So thank you so much for your attention.

Daniele: Awesome. Hey, thank you so much. That was a very good presentation.

I feel, you know, very grateful that you've been through all of this shit for us. Speaking of shit, I'm just going to make a little parenthesis: we had a bit of shit happening at the start. It's really interesting to see someone going through all of it, asking herself all of the good questions, and then coming back with just: these are the few things that you should know.

Q&A

---

Daniele: I have a ton of questions for you. But my first question is a very Swiss question. I know that when it comes to data privacy regulation, Switzerland is a bit different from Europe and from the States. Can you maybe say a little bit about why you chose the tools you chose, and why you feel they fit with the privacy laws that we have in Switzerland?

Choosing the Right Tool for Privacy

---

Vanessa: So the few tools that we checked were, I think, one from Australia, one from the US, and one from Germany. We decided to go with the one from Germany, just because they have their servers in Frankfurt, and they even say on their website that they look at the DSGVO (GDPR) and take care of that data.

But there are a few things which we are doing differently, because you can really tell that in America it's not seen as that critical. They put in the names of the participants, they upload the videos into that tool. They really put that data into it. For us it wasn't that much of a problem, because when we conduct research, it's always anonymous.

A stakeholder afterwards, or even we as researchers, don't know which participant said which phrase in the end, because it's all anonymous. If we do it right within the protocol and upload that already anonymized protocol, it's not that bad. The only thing which we did think about, but which hasn't been a problem yet, is with our banks.

When we have a difficult client, for example, or someone who we know takes special care of that data, we make sure that they're all right with the tool we're using to conduct our research.

Vanessa's favorite tool

---

Daniele: Yeah, I think that's a very good thing. If we play favorites, my favorite is Dovetail at the moment, because it's one that I'm very used to. But at the same time, you feel the kind of Australian slash US vibe with data privacy and this kind of stuff, where you ask yourself, oh, is there an alternative in Europe or maybe in Switzerland that is closer to our privacy culture, maybe.

So what's your favorite? Because you showed us a few of them, and you were really smart to not say which one is your personal favorite. But I'm very interested, because you went through a lot of those. Obviously there might be a favorite, and the question is then: why?

Why is this one the one that you finally use at evux?

Vanessa: We're working with Condens now, so we settled on that one. One reason is really that they are a German company. Unfortunately we haven't found a Swiss solution, but at least a German one. And the interesting thing is, let's say, transcripts and stuff: as we know, they don't work well with Swiss German, but maybe Condens has a bit more interest in making it work for us as well.

They're just closer to the German language, too. And if you have a bug or something, or if you have any requests, it's all in German. So it makes it a bit easier for us. The other reason was really that we have the flexibility to put pretty much every experiment we do right into the tool.

And we also have the ability to export those complete projects again, which is really important to us. Those were a few of the things. It's not as rigid, and for us it works perfectly fine. That's why we settled on Condens. But as I said, it's not that Condens is better than Aurelius or Dovetail or whatever.

We just found it works best for us. And I have to say, I really love working with it, and I don't want to go back, to be honest. So it's really something great. And the more we use it, the more we like it. So that's really great.

Daniele: I see you have favorites. You have favorites, which is good news.

How long does it take to build a research repository setup?

---

Daniele: And so how long did it take you, to go from, oh, we think we need this research repository thing to now we have our favorite and it starts to pay off?

Where you're all set up, and it's not onboarding any more, it's just a running thing. How long can we expect this to take? Because I assume nobody will just jump on one tool. They will go through the same process that you did. Maybe a little bit shorter, because you provided a lot already, but I assume that they will still test a few solutions.

So how long did that take you?

Vanessa: It took us quite a while, to be honest, but I did it next to the daily business as well. So there were times where I had more time to test, and sometimes you only get quite short free trials. So maybe you have just two weeks, and you know, okay, within those two weeks I have to make sure that I test everything I want to test with that tool.

So that's really something important. You have to do a bit of resource planning, basically. And I think the wish to implement such a research repository came quite early. But when I started, I think the analysis took me probably half a year, and then it took me another half a year until we were ready to say, all right, now we're working with it, now we're ready, everyone's onboarded.

So it was almost a year of process. But I also used every chance I had, as you said, working with the client's tool, and also to try maybe one more, or take a little more time for the analysis. It is quite a strenuous process, and you don't want to do that every year or every other year.

But I think it's worth thinking about it. I think it's worth taking the time for the analysis, because when you decide on a tool and you commit to it, it really helps. And for us, yeah, we really like it. And I think we're happy with our decision as well.

Daniele: I love how, you took the time and especially one thing that I find important in here is that, you did that next to all of the other things that you had to do.

Which is the case for any person who will do that. It's a very good example, because I feel whenever you go on the websites of these tools, they say, "Oh, set up in 30 minutes." Yeah, the first setup. Onboarding my boss, onboarding my colleagues, onboarding my clients, making sure it's really the best tool, trying it out, checking with compliance and stuff, that takes a lot of time, and it's not just the time of doing it.

It's also the time of waiting for feedback, waiting because somebody might be on holiday, and this kind of stuff. So this notion of seeing, hey, this is a process that will take one year, just plan for that. I think that will make a lot of people relaxed. Maybe a bit scared, but relaxed at the same time, knowing, oh, it's going to work.

It's gonna take time, so I don't have to solve that within the next 24 hours. And I think this is a very important learning here.

Vanessa: Exactly, yes. And what also was special for us, we said, all right, our pilot phase isn't just, half a year or whatever. We said, we wanna do five usability tests within the tool, no matter what the timeframe is.

We thought, all right, we're clever. We're taking the usability test because we've had quite a few in the past. And then we had this kind of time frame where there were just almost no usability walkthroughs. We'd done everything else, but no usability walkthroughs. It was like, okay, nothing's happening.

And we had to wait for the next usability walkthrough to put it in there. So it also really depends on when you launch your pilot phase, how fast you're going, and how much time you need to evaluate whether your needs are met or whether your goals are met with the tool.

How hard is it to onboard a boss, a colleague or a client to a research repository?

---

Daniele: And so you did this whole process, and you say the onboarding part is really a big one, and the change management part is obviously a really big one, which is the case for any service design project, which is really good.

And I'm asking myself, I have two questions. The first one is, what were the arguments, or how did you onboard your team, your boss, etc., and how did you onboard clients? I think these might be two very different conversations. Can you maybe give us a little bit of information about that?

Vanessa: Onboarding the clients wasn't as much of a problem, because none of our clients have direct access to the repository.

In Condens, at least, you get to share certain artifacts. So let's say a report or a whiteboard or whatever, and there it's so intuitive that it's usually not that big of a deal, to be honest. And most of what we do in Condens just happens in the back anyway.

So there's no need for clients to move around there or see everything. And when we think, okay, now that's something we want to share, then it's usually just, "Hey, here's the link. Take a look." It's read-only anyway, so they can't really break something. And there's not much interaction other than browsing on that one page.

With my bosses, it was really more about giving evidence, showing, or like saying, okay, listen, I've tried this, and this, and I found this, and this. That's why I think it's the right or the wrong solution. So it was really about, just giving the facts and figures and share my experience.

And with my colleagues, I've had some who thought, "Awesome. Let's do it. No problem." I've had some who said, "Why do we need that now? Why do we have to do it differently? It's not what I'm used to. I don't know whether it's really helping me." Or they were just like, "I've done it differently before, so I don't know if I can do it, or I don't know if I even want to do it that way."

We weren't struggling that much, to be honest, just because in our sector you're so open-minded and used to change. So I think it was a bit easier for us anyway, because we're just so used to change that it wasn't much of a change either. But there were some moments where people were like, okay, it gives me anxiety, because I don't feel like I can work with it, or that it helps me, or that it makes life easier, just because of what they were used to. We've used Excel before, so all the protocols were in Excel, and of course it's something different if you have to use a software for it, if you have a different user interface, if you have more features even. That's something you have to get used to. And that's why it was also really important to me to show them. I've made a whole guide saying, okay, if you want to do a new project, this is step one, click here; step two, do this; step three, watch out for this and that.

And I think it really helped to give them those guidelines for when they ask, okay, what was she saying again? Or how do we have to do that? And they can just go back to the page and say, oh yeah, exactly, that's how it was. And then it's really about getting to know the tool, because as I said, the longer we're working with it, the happier we become with it.

And really, after those first few experiences that my teammates had with that tool, it wasn't a problem anymore, because they were all like, okay, this is pretty cool. So that made it a bit easier.

What's the biggest benefit of a research repository?

---

Daniele: And so what's one fear that the most fearful people had, where you now see, oh, they changed their mind and say stuff like, "Oh, now I can do this. And this is so cool. I didn't think about that."? What's this hidden use case that you got from the more resistant people?

Vanessa: That's a really good question. I think one was really, wow, it's so much easier. Wow, I can use it.

It's understandable. So I think the interactions and the usage were just quite easy. But also, to be honest, and that's what I think is great about the tool, but it's also a huge problem in other teams: you have to work evidence-based. You can't just say "this is my recommendation" and not have any facts for it.

And I personally liked that from the beginning, but now my team also said, wow, it makes so much sense. First I just do my facts. I close them, they're good. Then I'll go into my insights. I'll work on the insights. All right, I've done the insights, check. And then I go to my recommendations. All right, I've got my recommendations, check.

So I think really working with that system, and being able to reach small steps and check them off a list, that's some feedback that I got that was like, that's pretty cool. And also the collaboration, because we have projects now where we were able to say, all right, you're taking care of the facts, I can take care of the insights, and basically the third one can take care of the recommendations. It was just so straightforward, and it was obvious what we had before. It wasn't just that a recommendation comes from what I've seen or heard, but it's not documented.

So it's really that evidence based and that step by step which I think are great use cases where you're like, okay, this is pretty cool.
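
The facts, then insights, then recommendations chain that Vanessa describes can be sketched as a small data model. This is only a minimal illustration of the idea of evidence-based recommendations, not how any particular repository tool stores its data; all class, field, and function names below are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Fact:
    """An anonymized observation taken from a research protocol."""
    text: str
    tags: list[str] = field(default_factory=list)  # e.g. shared global tags


@dataclass
class Insight:
    """An interpretation that should be backed by at least one fact."""
    text: str
    facts: list[Fact]


@dataclass
class Recommendation:
    """A proposed action that should be backed by at least one insight."""
    text: str
    insights: list[Insight]


def is_evidence_based(rec: Recommendation) -> bool:
    """Return True if every insight behind the recommendation cites facts."""
    return bool(rec.insights) and all(insight.facts for insight in rec.insights)
```

With a structure like this, one person can record facts, a second can write insights that reference them, and a third can draft recommendations, while a check such as `is_evidence_based` flags any recommendation that rests only on something seen or heard but never documented.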

What are the side benefits of a research repository?

---

Daniele: Yeah. It feels like there is a big positive side effect to having these research repositories, not just one source of truth where you can go and find past stuff, and one way to store everything, which is already a great use case.

But I think there is another thing in here, which is hidden between the lines, which is this, it forces a positive standardization, where we have to ask ourselves, I do my research work like that. Bob does it like that. And Marianne does it in that way. Why do we have three different ways?

Does it make sense? If it makes sense, how can we keep that freedom? If not, how can we take the best of all of these approaches and make it a bit of a template, and I feel this is an interesting thing because I see in many agencies and many organizations, you have this solo researcher mode where you do your work, you have your own ingredients, your own way of doing, and because you often work alone and on those projects you don't get stuck.

to see how some other people did it. And with the standard, you have to grow into it. And then you learn another way. But one thing which is also nice is that it's easier to pass from one product to the other without kind of restarting and say, Oh, I don't like the way that they built their insights in here.

So I'm going to have to go back in the sources. So this feels something quite interesting. Maybe give us a bit of a general view on what were the, a few of these standardizations that you felt were positives and maybe Where were the limits of these standardizations? Because I feel there is also a bit of a danger, the kind of a super rigid Swiss German mind.

I'm also a bit Swiss German, so I can say. When is it too rigid? And where do you feel it helps you bring standards that make your life a bit easier?

Vanessa: I think some of the standards were definitely the global tags, where we decided: are we using the Nielsen Norman heuristics, for example, or are we using the other ones? Because both were always around, and some of us preferred those, and some of us preferred the other ones. Saying, all right, we're just using these right now, and everyone gets along with those, was one thing in standardization which I think makes it easier now, and it wasn't that much of a change.

It's just preference: some just like to work with one and the others with the other one. But as I said, where things get really rigid is when you stick too much to that atomic research. Sometimes you do have to take a step in between to be able to get from, let's say, your facts to your insight.

And a few tools, as I said, Glean.ly for example, were just so rigid, and they thought that's a great thing because then everyone works like that. And we're like, but we need something right here in between, otherwise it's just not going to work for us. It's too rigid in going from one point to the other. So I think that was really one thing, together with the user interface, which just didn't work for us with Glean.ly. In Condens, we just have the flexibility that I mentioned, and I think that makes quite an impact when it comes to your process and the standardizations that you implement.

One other thing which really helped, I think, was to have that checklist at the beginning. So if you open a new project, you have your checklist: think about this, think about that. We've always worked like that, but it was never really explicit, and we were now able to write that down. For one thing, it's a help for us, because we know what to think about and can make sure we've thought about everything we need to think about.

But on the other hand, it also helps us with our standardization, because then we know, all right, everyone is working through that to-do list and making sure we have everything.

How can a research repository help with onboarding new talent?

---

Daniele: It feels to me that it really answers this question that I had when I was switching to another agency for work. And I came there and said, one of my first questions was, Okay, what's your playbook?

How do you do work here? And I think this is a question that many service designers have when they switch to another organization, which is: okay, how is work done here? And such tools can really help with that and make the onboarding a little bit quicker and easier, I think.

And that's where I will end this. I feel you're really bringing in this idea that standardization is great, that it helps, but at the same time we still need this flexibility to bring in the little creative human touch where we say, hey, in this specific project here, we need to tweak things a little bit so that it really serves our purpose in the best way.

I still have about 200 other questions on my sticky notes, but maybe we'll cover them some time in a live chat with viewers or something like that.

Vanessa: Sounds good.

Daniele: Absolutely. But for now, I will end the session here. I just want to say a big thank you to you. I really appreciate your time, your energy, your passion, and all the research that you've done and made public.

This is something that I really appreciate, and I'm sure that the community will appreciate it too. And yeah, once again, if you, as a viewer, want to give back to the team here, to Vanessa, to evux, check their website. I think it's one of the great resources about service design written in German.

So for the Swiss people it's very relevant, because there's not so much content in High German. And there is some really good content there, but at the same time in simple language, and German and simple is not always easy. I think you mastered that pretty well.

Final Thoughts and Community Engagement

---

Daniele: Before we close, is there a last thought that you'd like to share with the community, a last call to action?

Vanessa: I think the saying "know your process" doesn't just count when you want to implement a research repository. I think it's important in general, just as you said, Daniele: how are you doing work? How are you doing business? It's about becoming aware of how you're working and why you're working that way. I think "know your process" is a great thing, which is great for research repositories, but also in general to make work easier and better and to accelerate whatever you do.

Daniele: Thank you so much for that very important reminder. Again, a big thank you to you for your time, your motivation, and everything that you did today.

Vanessa: Thank you so much for having me.

Daniele: And have a lovely rest of the day. Bye bye.

This webinar transcript was generated automatically. Therefore, it will contain errors and funny sentences.
